Top 10 Web Scraping Tools in 2024

Web pages hold valuable data that can be challenging to gather daily for purposes like competitive analysis or research.

Web scraping tools are designed to ease this task.

Using these tools instead of manual scraping saves substantial time and effort, allowing teams to focus on other essential activities. It's important to choose the right tool, as risks like IP bans and data compatibility issues can arise.

This article will detail the top 10 web scraping tools for efficient data extraction from websites.

What is Web Scraping?

Web scraping is the process of extracting data from websites, including content such as text, images, and tables, and converting it into usable formats like Excel, Word, or databases. It’s a powerful tool for businesses and individuals alike, allowing them to gather data for analysis, competitive intelligence, or real-time updates.
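To make the idea concrete, here is a minimal sketch of that extract-and-convert step, using only Python's standard library. The sample HTML, the `TableExtractor` class, and the `table_to_csv` helper are illustrative assumptions, not part of any tool covered in this article.

```python
import csv
import io
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # completed rows
        self._row = None    # row being built, or None outside a <tr>
        self._cell = None   # text pieces of the current cell, or None outside a cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_csv(html: str) -> str:
    """Parse table rows out of an HTML string and return them as CSV text."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(parser.rows)
    return buffer.getvalue()


# Hypothetical page fragment standing in for a downloaded web page.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

print(table_to_csv(SAMPLE_HTML))
```

Real pages are messier than this fragment, which is a large part of why dedicated scraping tools exist.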

To efficiently gather insights on market trends and consumer behaviors, companies use web scraping tools. These tools automate the scraping process and include features like IP proxy rotation and automated data enhancement to navigate around anti-scraping measures such as CAPTCHAs and rate limits.

Additionally, businesses often use deterrents like fingerprinting and rate limiting to protect their data, even if it is publicly accessible. Web scraping tools are specifically designed to counter these defenses, ensuring reliable data collection without technical disruptions.
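As a rough illustration of the proxy rotation and rate-limit handling mentioned above, the sketch below cycles through a list of proxies and enforces a minimum delay between requests. The proxy addresses are hypothetical placeholders, and `RotatingFetcher` is a made-up name for this example. Commercial tools handle this with far more sophistication.

```python
import itertools
import time
import urllib.request

# Hypothetical proxy addresses, for illustration only.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


class RotatingFetcher:
    """Sends each request through the next proxy in a round-robin cycle."""

    def __init__(self, proxies, min_delay=1.0):
        self._proxies = itertools.cycle(proxies)
        self.min_delay = min_delay      # seconds to leave between requests
        self._last_request = None       # monotonic timestamp of the last request

    def next_proxy(self):
        return next(self._proxies)

    def _wait(self):
        # Sleep just long enough to keep at least min_delay between requests.
        if self._last_request is not None:
            elapsed = time.monotonic() - self._last_request
            if elapsed < self.min_delay:
                time.sleep(self.min_delay - elapsed)
        self._last_request = time.monotonic()

    def fetch(self, url, timeout=10):
        self._wait()
        proxy = self.next_proxy()
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        with opener.open(url, timeout=timeout) as response:
            return response.read()


fetcher = RotatingFetcher(PROXIES, min_delay=2.0)
# fetcher.fetch("https://example.com/") would go through proxy-a,
# the next call through proxy-b, and so on.
```

Spacing requests out like this is also simply polite to the target site, independent of any blocking concerns.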

Top 10 web scraping tools

Note: The tools below are listed in alphabetical order.

Apify

Apify is a robust web scraping platform that helps developers build, deploy, and monitor web scraping and browser automation projects. It's designed as a full-stack solution for data extraction, capable of gathering virtually any type of publicly available data from the internet.

Apify is unique because it not only provides tools for creating custom scraping agents but also offers a vast library of pre-built scrapers.

This platform is especially useful for tasks like market research, sentiment analysis, and lead generation.

Features:

Integrated proxy pool with smart IP rotation and automatic browser fingerprint mimicking.

Supports custom cookies and headers, along with an anti-bot bypass toolkit.

Compatibility with Python and JavaScript, including integration with libraries like Playwright, Puppeteer, Selenium, and Scrapy.

Pros:

Extensive library of over 1,500 ready-made web scraper templates.

Offers free web scraping courses, academies, and tutorials.

Reliable data extraction at any scale with numerous cloud service and web app integrations.

Highly rated for ease of use and flexibility, backed by extensive documentation.

Cons:

Customer support has been reported as suboptimal.

Some limitations in task concurrency, affecting simultaneous data extraction jobs.

Pricing:

Starts at $49 per month for the entry premium plan.

A free trial is available to test its features.

Data Miner

Data Miner offers a convenient web scraping extension called Data Scraper for Google Chrome, letting users extract data directly from web pages within their browser. With its focus on simplicity and efficiency, Data Miner makes it easy to export scraped data to CSV files.

Features:

Crawling automation for efficient data extraction.

Support for custom JavaScript scripts to enhance scraping capabilities.

Compatibility with all domains ensures broad applicability.

Additional functionalities include image downloading and support for click and scroll actions.

Pros:

Free live support sessions available for users.

User-friendly interface facilitates easy navigation and operation.

Cons:

Limited email support may hinder advanced troubleshooting.

Exclusive availability for Chrome users limits accessibility.

Advanced data retrieval tasks may require additional training at a fee.

Pricing:

Entry premium plan starts at $19.99 a month.

A free plan is available for basic usage needs.

Octoparse

Octoparse is a top choice for its easy-to-use, no-code web scraping tools suitable for both technical and non-technical users. It efficiently turns unstructured web data into organized datasets, ideal for various business applications.

Features:

Simple point-and-click interface, accessible to everyone.

Handles both static and dynamic sites with support for AJAX, JavaScript, and cookies.

Manages complex tasks such as logins, pagination, and extracting data from hidden source code.

Allows time-specific scraping tasks through scheduled scraping.

Ensures 24/7 operation with cloud-based tools.

Features an AI-powered web scraping assistant for smarter data handling.

Pros:

Supports data-heavy websites with features like infinite scrolling and automatic looping.

Extensive documentation and support available in multiple languages including Spanish, Chinese, French, and Italian.

Outputs data in Excel, API, or CSV formats.

Cons:

No support for Linux.

Some features may be complex for beginners.

Pricing:

Free plan available with basic features.

Premium plans start at $75 per month.

ParseHub

ParseHub stands out as a versatile web scraping tool that simplifies data extraction with an easy-to-use interface suitable for both beginners and advanced users.

It’s available as a desktop application that enables data extraction from a wide range of web sources, including complex dynamic sites that rely on AJAX and JavaScript.

Targeted primarily at users needing to pull data behind logins, from maps, or tables, ParseHub supports projects of various complexities.

Features:

Data extraction from multiple pages, including dynamic content using AJAX and JavaScript.

Advanced data collection capabilities with REST API for integrating scraped data into web and mobile applications.

Scheduled data collection runs and automatic storage in the cloud.

Support for infinite scroll, pagination, and IP rotation to handle large-scale scraping tasks efficiently.

Regular expressions and customizable headers and cookies for precise data targeting.

Pros:

User-friendly interface that requires no coding skills.

Free version available, making it accessible for initial testing and small projects.

Robust data extraction capabilities that can handle complex websites and interactive elements.

Cons:

Primarily a desktop application which may limit accessibility compared to cloud-based solutions.

Known issues with bugs that can disrupt scraping activities.

Free plan limitations include low page count and duration restrictions, making larger projects difficult without upgrading.

Pricing:

Free plan covers up to 200 pages and 40 minutes per project.

Paid plans begin at $149 per month, offering additional features and higher data limits for extensive scraping needs.
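The regular-expression feature listed above is easiest to picture with a small example. The sketch below is plain Python rather than ParseHub's own interface. It assumes a hypothetical pattern for US-style dollar amounts and shows how a regex can narrow scraped text down to just the values you need.

```python
import re

# Illustrative pattern for dollar amounts such as "$49" or "$1,299.00".
# This pattern is an assumption for the example, not taken from ParseHub.
PRICE_PATTERN = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d{2})?)")


def extract_prices(text):
    """Return every dollar amount found in text as a float."""
    return [float(match.replace(",", "")) for match in PRICE_PATTERN.findall(text)]


sample = "Basic plan: $49 per month. Pro plan: $1,299.00 per year."
print(extract_prices(sample))  # [49.0, 1299.0]
```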

Playwright

Playwright, developed by Microsoft, is a highly acclaimed headless browser library. Recognized for its extensive capabilities in both end-to-end testing and web scraping, it is designed to handle dynamic content effectively, making it an excellent choice for simulating complex user interactions on web pages. Its rich feature set supports seamless browser control across different environments.

Features:

Simulates various browser interactions like navigation, form filling, and data extraction.

Offers comprehensive APIs for clicking, typing, and filling forms.

Supports both headed and headless modes for flexible browser automation.

Features native support for parallel execution across multiple browsers.

Includes integrated debugging tools and built-in reporting capabilities.

Advanced auto-waiting mechanism to manage asynchronous tasks.

Pros:

Provides a robust and comprehensive set of browser automation tools.

Cross-platform, cross-browser, and cross-language compatibility enhance its versatility.

Maintained by Microsoft, ensuring regular updates and high reliability.

Intuitive to work with, thanks to a consistent API across different programming languages.

Cons:

Initial setup can be complex and might require some technical knowledge.

Learning curve to fully utilize all features is steep, demanding time and effort.

Pricing:

Free to use.

ScraperAPI

ScraperAPI is a robust web scraping tool that simplifies the extraction of HTML from web pages, catering especially to scenarios involving JavaScript-rendered content and anti-scraping technologies.

Features:

Simple integration requiring only an API key and URL.

Supports JavaScript-rendered pages.

Advanced features like JSON auto parsing and smart proxy rotation.

Manages CAPTCHAs, proxies, and browser specifics automatically.

Features like custom headers and automatic retries enhance scraping efficiency.

Geolocated proxy rotation helps route requests through various locations.

Unlimited bandwidth ensures fast and reliable scraping operations.

Provides a 99.9% uptime guarantee and professional support.

Pros:

Easy to use with extensive documentation available in multiple programming languages.

Highly customizable to fit user-specific needs.

Offers both free and premium proxy support.

Cons:

Some advanced features like worldwide geotargeting are only available with higher-tier plans.

May require some technical knowledge to fully utilize all functionalities.

Pricing:

Starts at $29 per month with 250,000 API calls and ten concurrent threads.

Premium plan at $49 per month includes unlimited bandwidth and additional features.
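To show what "only an API key and URL" can look like in practice, here is a small sketch that builds such a request URL. The endpoint and the parameter names (`api_key`, `url`, `render`, `country_code`) are assumptions based on the commonly documented form of the service, so check them against the current ScraperAPI documentation before relying on them. `build_request_url` is a hypothetical helper name.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Assumed endpoint; confirm against the official ScraperAPI documentation.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key, target_url, render_js=False, country_code=None):
    """Compose the GET URL that asks the API to fetch target_url on your behalf."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render"] = "true"                # assumed flag for JavaScript rendering
    if country_code:
        params["country_code"] = country_code    # assumed flag for geotargeting
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url(
    "YOUR_API_KEY",
    "https://example.com/products?page=2",
    render_js=True,
)
print(request_url)

# The target URL is percent-encoded, so its own query string survives intact.
print(parse_qs(urlsplit(request_url).query)["url"][0])
```

The resulting URL can then be requested with any HTTP client. Percent-encoding the target URL matters, because otherwise its own `?page=2` would be read as a parameter of the API call.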

ScrapingBee

ScrapingBee is a premium web scraping API designed to simplify online data extraction tasks. It caters to developers, offering a user-friendly API that handles proxies and headless browser configuration, allowing users to focus solely on data extraction.

The API boasts a large proxy pool, aiding in bypassing rate-limiting restrictions and minimizing the risk of getting blocked by target websites.

Features:

Support for interactive websites requiring JavaScript execution.

Automatic anti-bot bypass, including CAPTCHA solving.

Customizable headers and cookies.

Geographic targeting for specific data localization.

XHR/AJAX request interceptions for comprehensive data extraction.

Flexible data export options in formats like HTML, JSON, and XML.

Scraping API call scheduling for efficient task management.

Pros:

Pay only for successful requests, ensuring cost-effectiveness.

Extensive documentation and abundant blog posts for easy integration and troubleshooting.

Simple configuration for scraping endpoints, reducing setup complexity.

Comprehensive feature set catering to diverse scraping needs.

Effective performance across a wide range of websites.

Cons:

Not the fastest scraping API available.

Limited concurrency, potentially affecting simultaneous scraping tasks.

Requires technical knowledge for optimal usage and configuration.

Pricing:

Starts at $49 per month for the entry plan.

A limited free trial is available for testing purposes.

ScrapingBot

ScrapingBot is a versatile web scraping tool that simplifies data extraction across various platforms, including e-commerce sites, search engines, and social media. It delivers data directly from HTML in a structured JSON format, making it suitable for a wide range of applications from market analysis to price monitoring.

Features:

Multiple APIs for targeted data scraping.

Straightforward data collection via URL input.

Proxy integration available.

Extensive documentation and support resources.

Compatible with other tools and services through API integration.

Pros:

Free plan available with monthly API credits.

Transparent pricing with no hidden fees.

Fast performance and reliable data delivery.

Supports JavaScript rendering and headless browsing.

Effective for both simple and complex data needs.

Cons:

No user dashboard for tracking usage statistics and managing subscriptions.

Limited support options, primarily through email.

Pricing:

Free to use; the free plan includes monthly API credits.

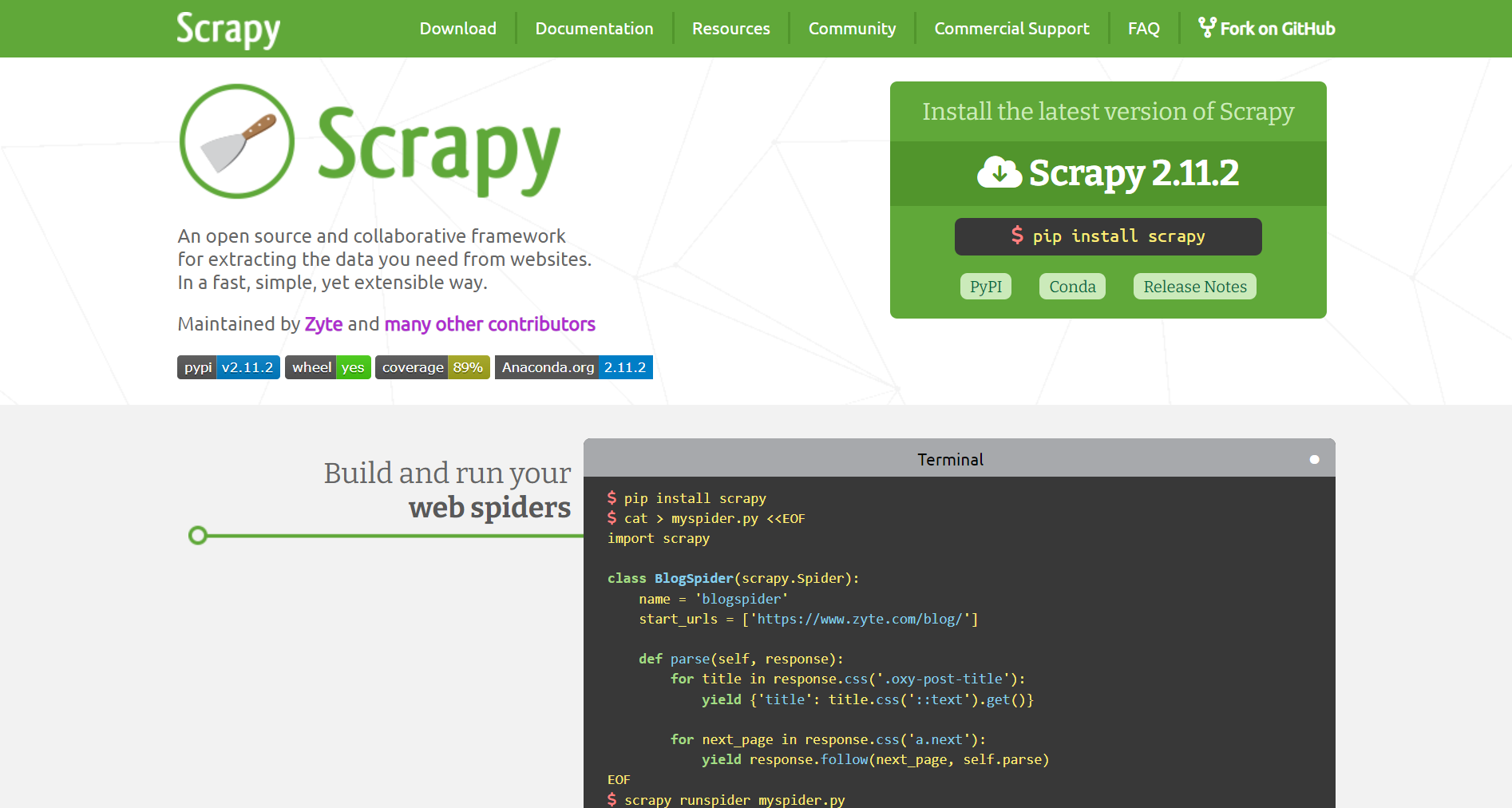

Scrapy

Scrapy is an open-source Python framework designed for high-speed web scraping and data extraction from websites. It is renowned for its efficiency and flexibility, making it ideal for both simple and complex data gathering tasks.

Features:

Support for CSS selectors and XPath expressions.

Offers built-in mechanisms like Selectors for data extraction and an integrated HTML parser.

Extensible via middleware, allowing for custom functionalities and integration with various proxies and APIs.

Pros:

Provides scalability, capable of handling large-scale scraping tasks with ease.

High-speed crawling and scraping framework.

Cons:

Requires coding knowledge, particularly in Python, which might be a hurdle for non-developers.

Limited in-built browser automation capabilities.

Scraping interactive, JavaScript-heavy sites requires an additional integration such as Splash.

Pricing:

Completely free.

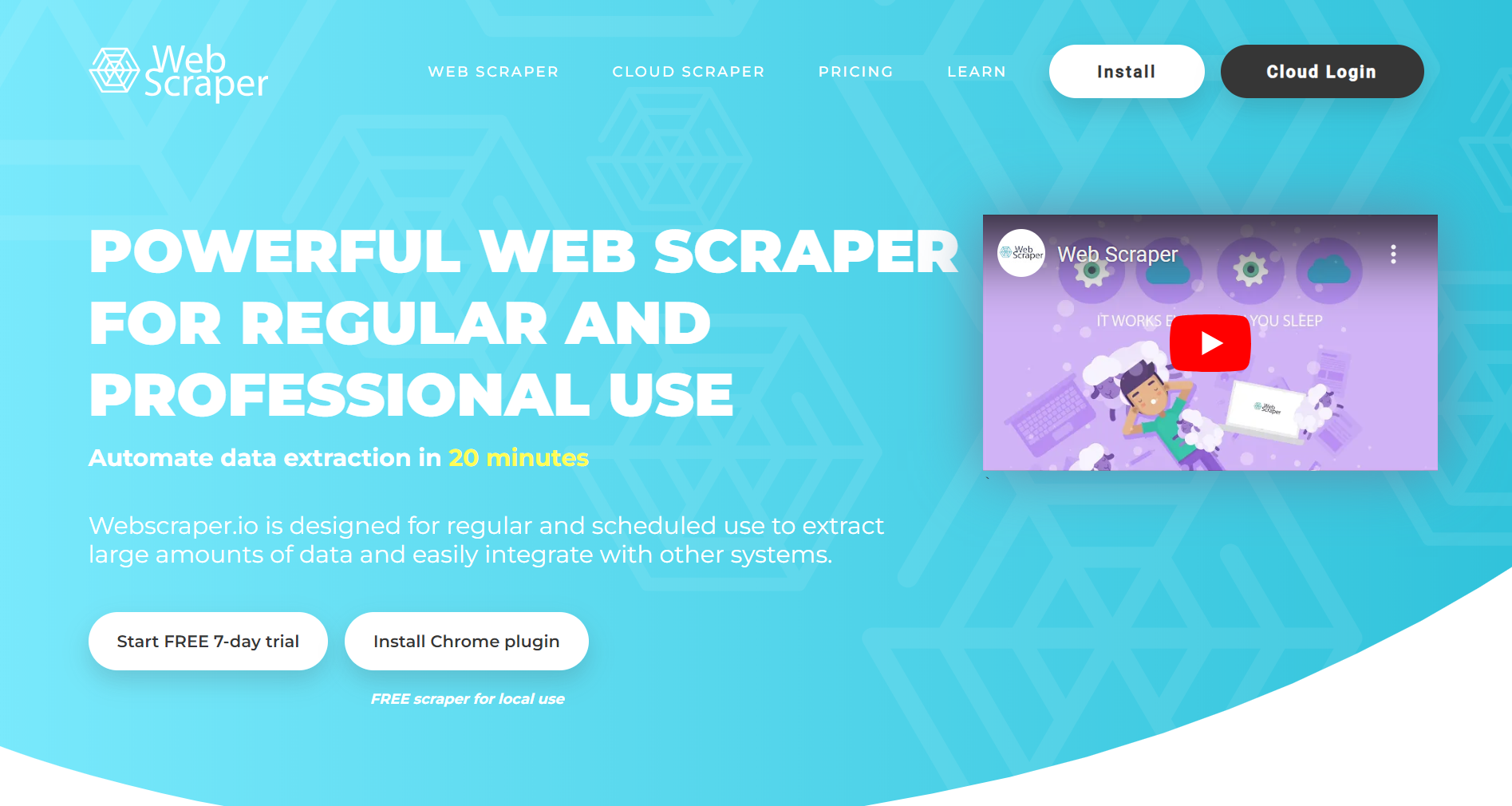
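For readers new to XPath, the following sketch shows the kind of expression-based selection that Scrapy's selectors support. To keep it runnable without installing anything, it uses the limited XPath subset in Python's standard `xml.etree.ElementTree` module on a well-formed sample document, not Scrapy itself. In a Scrapy spider, the comparable calls would be `response.xpath(...)` or `response.css(...)`. The sample markup and the `extract_quotes` helper are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for a downloaded page.
SAMPLE = """
<html>
  <body>
    <div class="quote"><span class="text">First quote</span><small class="author">Ada</small></div>
    <div class="quote"><span class="text">Second quote</span><small class="author">Linus</small></div>
    <div class="ad"><span class="text">Buy now</span></div>
  </body>
</html>
"""


def extract_quotes(markup):
    """Select every div with class 'quote' and pull out its text and author."""
    root = ET.fromstring(markup.strip())
    quotes = []
    for node in root.findall(".//div[@class='quote']"):
        quotes.append(
            {
                "text": node.findtext("span[@class='text']"),
                "author": node.findtext("small[@class='author']"),
            }
        )
    return quotes


for quote in extract_quotes(SAMPLE):
    print(quote)
```

Note that `ElementTree` expects well-formed XML. Real-world HTML usually is not, which is one reason a framework with a tolerant HTML parser is the more practical choice.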

Web Scraper

Web Scraper is a versatile tool available both as a cloud-based service and a Chrome browser extension, catering to users who need simple and efficient data extraction. It is particularly friendly for beginners due to its easy-to-use interface. Users can extract data not only from static pages but also from dynamic websites, utilizing its powerful scraping capabilities directly in the browser or via the cloud.

Features:

Data extraction from dynamic websites, including those with complex categories and sub-categories.

Offers various export formats such as CSV, XLSX, and JSON.

Includes a scraping task scheduler for automating data extraction processes.

Proxy integration to manage IP rotation and avoid detection.

Point-and-click capabilities within the browser for manual data selection.

Pros:

Cloud-based, allowing remote access to extracted data through an API.

User-friendly interface that supports visual HTML element selection.

Suitable for basic scraping requirements with straightforward functionality.

Provides both local and cloud-based execution of scraping tasks.

Cons:

Limited concurrency, affecting the number of simultaneous scraping tasks.

Higher cost may not be affordable for small-scale users.

Some users have reported slow response times and internal server errors.

Lacks comprehensive video documentation and advanced support options.

Pricing:

Entry premium plan starts at $50 a month. A free plan and a limited free trial are available for initial use and testing.

Summary Table

| Tool | Key Features | Pricing |
| --- | --- | --- |
| Apify | Full-stack solution, pre-built scrapers, supports market research | From $49/month |
| Data Miner | Chrome extension, supports custom JavaScript | From $19.99/month |
| Octoparse | Point-and-click interface, handles dynamic sites | From $75/month |
| ParseHub | Extracts data from dynamic sites, REST API | From $149/month |
| Playwright | Simulates browser interactions, comprehensive APIs | Free |
| ScraperAPI | Simple API integration, supports JavaScript pages | From $29/month |
| ScrapingBee | Handles JavaScript sites, anti-bot measures | From $49/month |
| ScrapingBot | Supports various APIs, proxy support, easy API integration | Free |
| Scrapy | Supports CSS selectors and XPath, built-in HTML parser | Free |
| Web Scraper | Cloud service, extracts from dynamic sites | From $50/month |

Factors to consider when choosing web scraping tools

Selecting the right web scraping tool involves understanding several important factors to ensure it meets your specific needs for data collection.

Ease of Use

While many tools come with helpful tutorials, it's essential that the tool matches your technical comfort level and requirements. Some tools are optimized for Windows, others for macOS, and each offers a different user experience. Choose a tool that you can use confidently and efficiently without a steep learning curve, and make sure it integrates well with your existing systems and workflows.

Pricing Transparency

Cost is a crucial consideration. Many tools offer free versions with limited features, while paid versions provide advanced functionalities. Always opt for tools with clear pricing and a free trial period to assess their capabilities before purchasing.

Supported Data Formats

Most web scraping tasks require handling formats like CSV, which is widely used and recognized, especially among those who regularly work with Microsoft Excel.

Additionally, a good scraping tool should support JSON for its simplicity and readability, as well as XML and sometimes SQL for more complex database interactions.
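As a small illustration of why format support matters, the sketch below writes the same scraped records to both CSV and JSON using Python's standard library. The `records` list reuses two entries from the summary table above, and the helper names `to_csv` and `to_json` are hypothetical.

```python
import csv
import io
import json

# Example records, taken from the summary table in this article.
records = [
    {"tool": "Scrapy", "pricing": "Free"},
    {"tool": "Apify", "pricing": "From $49/month"},
]


def to_csv(rows):
    """Serialize a list of same-shaped dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize the same rows to indented JSON text."""
    return json.dumps(rows, indent=2)


print(to_csv(records))
print(to_json(records))
```

CSV opens directly in spreadsheet software, while JSON preserves nesting and types, so which one you need depends on where the data goes next.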

Performance and Flexibility

The best scraping tool should be capable of fast and efficient data extraction, with the ability to interface smoothly with websites through APIs and manage multiple proxies.

Opting for an open-source tool can provide the flexibility needed for customizing scraping activities to suit unique project demands.

Customer Support

Reliable customer support is indispensable. Opt for tools that provide reliable and accessible customer service, ideally available 24/7. Good support can make a significant difference, especially when you encounter technical challenges or need guidance on optimizing your scraping setup.

Conclusion

When you're using web scraping tools, it's important to be aware of browser fingerprinting. Automated scripts often expose tell-tale traits that websites can detect. If a site identifies your script as a bot, it might block your IP or prevent you from accessing the data you need.

This is where BrowserScan comes into play. It features a robot detection page that checks for these bot-like characteristics. If your script fails a check, the page reports the result, and you can use those results to improve your automation scripts.