Fixed: How to Avoid Selenium Detection

Selenium is a powerful automated testing tool that is also widely used for crawling and data collection because it can handle JavaScript rendering, Ajax requests, and complex user interactions. Sometimes, however, Selenium is detected and blocked by the target website.

But don't worry: there are ways to get around these detection methods. In this guide, we'll explain how websites detect Selenium and offer practical tips to help you keep your scripts running undetected.

How Websites Detect Selenium

When using Selenium for web automation, it’s crucial to understand how websites detect such tools to effectively avoid detection. Here’s a detailed look at common detection techniques and what they entail.

window.navigator.webdriver Detection

The window.navigator.webdriver property lives on the navigator object of the global window object, and the browser sets it when it is driven by an automation tool such as Selenium WebDriver. A page script can read one of three values:

True signifies that the browser is currently under the control of an automation tool.

False indicates that the browser is operating independently, without automation.

Undefined means the browser does not expose the property, so it gives no indication either way.

When Selenium WebDriver starts a browser session, this property is typically set to true so that websites can identify automated visits through JavaScript. During normal human browsing it is false or undefined. If you use Selenium without changing it, the website will read it as true. Once a website detects an automated visit, it may respond with defensive actions such as displaying CAPTCHAs, restricting access to pages, logging the incident, or issuing alerts.

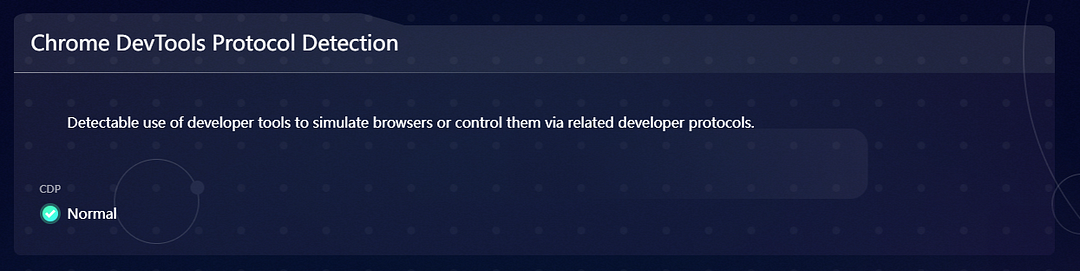
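To see what a website sees, you can read the same property from your own script. The sketch below assumes `driver` is an already running Selenium WebDriver instance; the helper names `read_webdriver_flag` and `looks_automated` are made up for this example.

```python
def read_webdriver_flag(driver):
    """Return the value a page script would get for navigator.webdriver."""
    # execute_script runs JavaScript in the current page and returns the result:
    # True, False, or None (when the property is undefined).
    return driver.execute_script("return navigator.webdriver")


def looks_automated(flag):
    """Treat only an explicit True as a sign of automation."""
    return flag is True
```

Calling `looks_automated(read_webdriver_flag(driver))` before and after changing your launch options is a quick way to confirm whether a setting had any effect.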

Chrome DevTools Protocol (CDP) Detection

The Chrome DevTools Protocol (CDP) is the protocol that Chrome's built-in developer tools use to inspect, debug, and control the browser. When using Selenium, you might use CDP to simulate or control browser behaviors more closely.

Websites can detect the use of CDP by watching for commands that regular users rarely or never generate. These might include unusual alterations to the document object model (DOM), or specific combinations of API calls that regular users seldom make.

Additionally, detection mechanisms may include monitoring network activity on debugging ports, such as port 9222, which is commonly used for remote debugging when enabled. They may also detect patterns such as batch operations on forms, which typically indicate automated scripts rather than human interactions.

Analyzing User-Agent Strings

The user-agent string tells a website what type of device and browser is accessing it. Automated scripts sometimes use a default or unusual user-agent string, which can be a clear sign of automation if the string includes terms like ‘Selenium’ or unusual browser versions. Changing the user-agent string to mimic a popular browser can help avoid this type of detection.
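As a rough illustration of this kind of check, here is a minimal sketch of a user-agent filter. The marker list is an assumption chosen for the example, not a list taken from any real website, and real detection systems look at far more than substrings.

```python
# Illustrative markers only; an actual site would maintain its own rules.
AUTOMATION_MARKERS = ("selenium", "headlesschrome", "phantomjs")


def suspicious_user_agent(user_agent):
    """Return True when the string is empty or contains an automation marker."""
    ua = (user_agent or "").lower()
    if not ua:
        # A missing user-agent is unusual for a regular browser.
        return True
    return any(marker in ua for marker in AUTOMATION_MARKERS)
```

You can run your own user-agent string through a check like this before sending it to a target site.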

Page Load Time and Interaction Patterns (User Behavior Analysis)

Automated scripts often interact with web pages differently from humans. Websites can detect automation by analyzing behaviors such as:

Scrolling and Clicking: Bots may scroll smoothly and uniformly, unlike the erratic patterns of human users. Automated clicks also tend to occur at consistent intervals and exact screen positions, in contrast to the more variable timing and accuracy of human clicks.

Page Dwell Time: Scripts typically spend uniform or minimal time on pages, unlike humans, whose dwell time varies with interest.

Timing of Requests: Bots may operate during off-peak hours like late nights or early mornings.

Request Intervals: Automated scripts might make requests at regular, predictable intervals, unlike the sporadic patterns of human activity. High request rates that all carry the same user-agent string are especially easy for websites to detect.
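To make the request-interval signal concrete, here is a small sketch of how regularity could be measured from a list of request timestamps (in seconds). The coefficient-of-variation heuristic and the 0.1 threshold are assumptions for illustration; the detection logic of real websites is not public.

```python
from statistics import mean, pstdev


def interval_variation(timestamps):
    """Coefficient of variation of the gaps between consecutive requests."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        return None  # not enough data to judge
    average = mean(gaps)
    if average == 0:
        return 0.0
    return pstdev(gaps) / average


def looks_scripted(timestamps, threshold=0.1):
    """Flag traffic whose gaps are almost identical."""
    variation = interval_variation(timestamps)
    return variation is not None and variation < threshold
```

Requests sent exactly every two seconds produce a variation of zero and get flagged, while irregular human-like gaps produce a much larger value.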

Browser Fingerprint Detection

A browser fingerprint is a unique identifier that websites generate for a visitor. They build a profile of the browser by collecting details like the browser version, screen resolution, fonts, and plugins. This profile acts like an online fingerprint, hence the name. Automated scripts often lack a personalized browser configuration, which makes them easy to spot because they match the typical patterns of automated tools or web crawlers.
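The idea can be sketched in a few lines: collect attributes, put them in a stable order, and hash them. The attribute names and values below are hypothetical, and real fingerprinting scripts collect many more signals than this.

```python
import hashlib
import json


def fingerprint_id(attributes):
    """Hash a dictionary of browser attributes into a stable identifier."""
    # sort_keys makes the result independent of the order attributes were collected in
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical attribute set, for illustration only
example_attributes = {
    "browser_version": "120.0",
    "screen_resolution": "1920x1080",
    "fonts": ["Arial", "Verdana"],
    "plugins": [],
}
example_id = fingerprint_id(example_attributes)
```

Two browsers with identical attributes end up with the same identifier, which is why many crawler instances launched from one default configuration are easy to group together.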

How to Fix If Selenium is Detected

Although websites are increasingly implementing strict anti-scraping measures, there are still some ways to cope. Just be careful not to break the law or put excessive strain on the website's server.

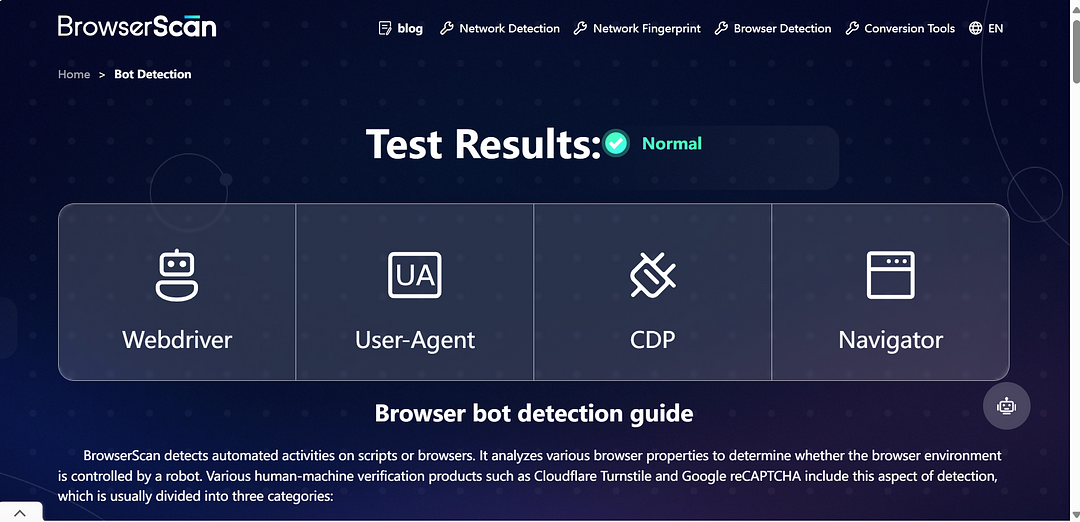

1. Use BrowserScan’s Bot Detection Feature

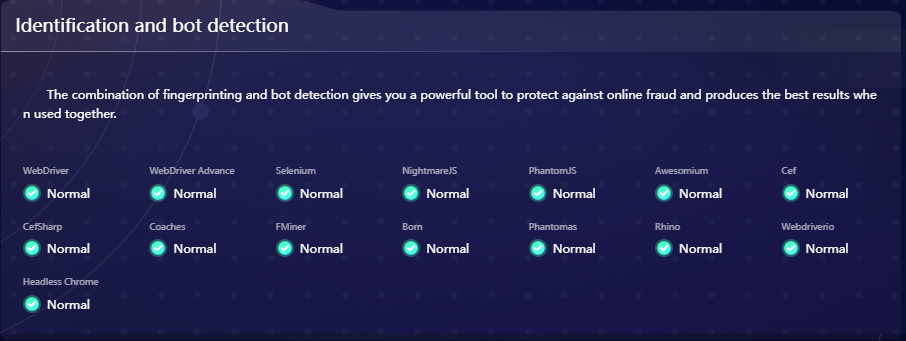

If you find out that your Selenium scripts are getting detected, BrowserScan’s bot detection feature can help. It primarily focuses on these four key areas, which cover most of the parameters or vulnerabilities that can be detected by the website:

WebDriver: Checks your browser for signs that it is controlled by WebDriver.

User-Agent: Checks whether your user-agent string looks like a real user's, or whether it gives away that you're using a script.

CDP (Chrome DevTools Protocol): Detects whether developer tools are being used to simulate or control the browser.

Navigator Object: Checks the Navigator object for signs of spoofing by identifying inconsistencies that deviate from normal user data patterns.

It is recommended to test your scripts with BrowserScan before running them and again as part of regular updates. That way you can adjust settings, make your scripts stealthier, reduce the risk of detection, and keep your automation tasks running without interruptions.

2. Modify WebDriver Properties

As discussed, websites identify automated visits by comparing a visitor's actions to those of an average human user. To avoid detection and potential restrictions, it's crucial to make your Selenium scripts emulate human behavior as closely as possible.

This starts at the configuration level, where you can simulate the traits of a typical user. The following is a list of adjustable WebDriver properties that can help you lower the risk of detection while improving the performance of your scripts.

1). Disable the window.navigator.webdriver Flag

You can tell your browser to hide its automation status from websites. Add the --disable-blink-features=AutomationControlled flag when launching your browser, and the window.navigator.webdriver property will read false instead of true.

2). Employing Headless Browsing with Stealth Parameters

Running your browser in headless mode means the browser operates without a graphical user interface, working in the background. Headless mode is convenient for servers and scheduled jobs, but it is not invisible on its own: older headless Chrome builds, for example, include "HeadlessChrome" in the default user-agent string. Combine it with the parameters below and a custom user-agent.

You can enable it by adding the "--headless" parameter. In addition, here are some parameters you can use as needed alongside headless browsing:

--incognito: This flag starts the browser in incognito mode, which prevents the browser from keeping cookies, cache, or browsing history once the session ends.

--disable-gpu: While GPU hardware acceleration can improve rendering performance in a full browser, it is often unnecessary in headless mode. Turning it off can reduce the browser's footprint and free up resources for your script.

--no-sandbox: This flag switches off Chrome's sandbox, the isolation layer that restricts what browser processes can do. It is sometimes needed in containers or other restricted environments where the sandbox cannot start and causes errors or crashes. Because it removes a security boundary, use it only when the browser will not run without it.

3). Set a Custom User-Agent String

You can use a custom user-agent to mimic a popular browser version, which makes your Selenium-driven browser appear to be a regular user's browser. Changing the user-agent string can also make your browser look like a different device, which is useful for blending in with regular traffic. Here's example code that contains all the settings mentioned above:

Example Code:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

# The Options class is used to set the startup options of the Chrome browser.

options = Options()

# Disable the window.navigator.webdriver flag.

options.add_argument("--disable-blink-features=AutomationControlled")

# Run headless with the optional parameters described above.

options.add_argument("--headless")

options.add_argument("--disable-gpu")

options.add_argument("--no-sandbox")

options.add_argument("--incognito")

# Define a user-agent string and pass it to Chrome as a startup parameter.

# This is an example value; replace it with the string of a current browser release.

user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36"

options.add_argument(f"--user-agent={user_agent}")

# Launch Chrome using all the options set above.

driver = webdriver.Chrome(options=options)

3. Load Extensions

Extensions can change your browser’s behavior or manage tasks like ad blocking, which can also help mimic a typical user environment.

Example Code:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

options = Options()

# Load a Chrome extension from the specified path

options.add_extension('path/to/extension.crx')

driver = webdriver.Chrome(options=options)

4. Use Proxy IPs

Using proxies can hide your IP address, making it harder for your automation scripts to be tracked and blocked. This allows you to switch to a different IP address if the current one is blocked by the target website.

The following example launches a Chrome browser with all HTTP and HTTPS requests routed through the specified proxy server.

Example Code:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

# Replace with a valid proxy address in host:port form

proxy_ip = '192.168.1.1:8080'

options = Options()

# Route HTTP and HTTPS traffic through the proxy with Chrome's --proxy-server switch.

# Recent Selenium 4 releases no longer accept desired_capabilities, so the proxy is set through Options.

options.add_argument(f"--proxy-server={proxy_ip}")

driver = webdriver.Chrome(options=options)
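If you have several proxies, you can rotate through them so that a blocked address is simply replaced on the next launch. The sketch below only builds Chrome's `--proxy-server` launch argument; the function name and the addresses (taken from a documentation-only IP range) are placeholders for this example.

```python
from itertools import cycle


def proxy_arguments(proxy_pool):
    """Yield a --proxy-server argument for each proxy in turn, forever."""
    for address in cycle(proxy_pool):
        yield f"--proxy-server={address}"


# Placeholder addresses; replace them with proxies you are allowed to use.
rotation = proxy_arguments(["203.0.113.10:8080", "203.0.113.11:8080"])
first_argument = next(rotation)
```

Each time you start a new browser session, pass `next(rotation)` to `options.add_argument()` before creating the driver.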

5. Simulate User Behavior

To simulate human-like behavior in bots, a regular practice is to emulate natural interactions with the mouse and keyboard, as well as to vary the timing of these interactions.

In the following sections, we will discuss methods for achieving this in two distinct aspects:

1). Mimicking Mouse and Keyboard Operations

Many developers might use ActionChains for user actions such as moving the mouse and entering text. However, there are limitations to its send_keys() method when it comes to actions that require keyboard input.

In some cases, the send_keys() method of the ActionChains class may fail to trigger JavaScript events, or it may trigger them in a manner that differs from actual user input, which can easily lead to the action being identified as a bot script.

If your target website involves a lot of JavaScript interactions, you can run JavaScript directly in the page to address this issue. In Python this is done with driver.execute_script(), the counterpart of the IJavaScriptExecutor interface in the .NET bindings.
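Here is a minimal sketch of that approach: it sets an input's value and then dispatches the `input` and `change` events that page scripts usually listen for. It assumes `driver` is a Selenium WebDriver and `element` is an input located earlier; `set_value_with_events` is a name made up for this example, and some front-end frameworks may need additional handling.

```python
SET_VALUE_SCRIPT = """
const element = arguments[0];
const text = arguments[1];
element.value = text;
element.dispatchEvent(new Event('input', { bubbles: true }));
element.dispatchEvent(new Event('change', { bubbles: true }));
"""


def set_value_with_events(driver, element, text):
    """Set an input's value and fire the events a page would expect."""
    # Extra arguments to execute_script are exposed to the script as arguments[0], arguments[1], ...
    driver.execute_script(SET_VALUE_SCRIPT, element, text)
```

This is a fallback for fields where send_keys() does not trigger the page's handlers; where send_keys() works, it stays closer to real keyboard input.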

2). Randomizing Actions

Varying how long your script spends on pages or how it interacts with elements can also help avoid detection. The following code contains a simple delay, which introduces randomness into the script’s behavior, making it less predictable and more like a human user.

If you need finer control, you can set different time intervals for each action, such as 5 seconds between the end of one visit and the start of the next, 3 seconds after each click, and so on.

Example Code:

import time

import random

# Random delay between 5 and 10 seconds

time.sleep(random.randint(5, 10))
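Per-action delays can be sketched with a small lookup table. The ranges below are assumptions built around the 5-second and 3-second figures mentioned above, and `human_pause` is a hypothetical helper name; tune the values for your own target.

```python
import random
import time

# Assumed ranges in seconds, centered on the figures mentioned above
DELAY_RANGES = {
    "page_visit": (4.0, 6.0),
    "click": (2.0, 4.0),
}


def human_pause(action, sleep=time.sleep, rng=random):
    """Sleep for a random, non-integer time that depends on the action."""
    low, high = DELAY_RANGES[action]
    delay = rng.uniform(low, high)
    sleep(delay)
    return delay
```

Call `human_pause("click")` after each click and `human_pause("page_visit")` between pages. The `sleep` and `rng` parameters exist only so the function can be exercised without actually waiting.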

6. Employ the undetected-chromedriver Library

undetected-chromedriver is a Python library that wraps the Selenium ChromeDriver and is designed to make automated scripts look like a real user's browser, reducing the risk that automation is detected.

It integrates with the Selenium WebDriver API, so existing scripts need few changes, and it is built to evade common automation detection methods.

Example Code:

import undetected_chromedriver as uc

driver = uc.Chrome()

# Replace with a valid URL

driver.get('https://www.example.com')

7. Use the excludeSwitches Parameter

In this example, we will use a simple code snippet to illustrate the use of Selenium WebDriver’s add_experimental_option method. This method enables users to set experimental browser options by supplying key-value pairs.

The excludeSwitches option specifies a list of switches to exclude during browser startup. Including enable-automation in this list stops Chrome from being launched with its automation switch, which among other things removes the "Chrome is being controlled by automated test software" notice. The browser then looks more like a typical user's browser, which helps it pass basic bot checks.

Example Code:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

options = Options()

# Exclude the enable-automation switch

options.add_experimental_option("excludeSwitches", ["enable-automation"])

driver = webdriver.Chrome(options=options)

# Replace with a valid URL

driver.get('https://www.example.com')

Why Can My Selenium Crawler Be Easily Detected?

High Request Frequency and Repetitive Patterns

One common reason your Selenium bot might get caught is due to high request frequency. If your script sends requests too quickly or keeps hitting the same page, it raises red flags. This behavior doesn’t match how humans browse the web. In addition, if your script is scraping data 24 hours a day, it will also be recognized as a bot. To fix this, try to space out your requests, vary the pages you visit and set the script to run at a normal time.
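Two of these fixes, varying the page order and running only at normal hours, can be sketched with the standard library alone. The 08:00 to 22:00 window and the function names are assumptions for this example.

```python
import random
from datetime import datetime, time as dtime


def within_active_hours(now, start=dtime(8, 0), end=dtime(22, 0)):
    """Return True when `now` falls inside the assumed daytime window."""
    return start <= now.time() < end


def shuffled_visits(urls, rng=random):
    """Return the URLs in a random order, leaving the original list untouched."""
    order = list(urls)
    rng.shuffle(order)
    return order
```

A crawl loop could check `within_active_hours(datetime.now())` before each batch and iterate over `shuffled_visits(urls)` instead of a fixed list, with a randomized delay between requests.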

Crawling Only the Source Code

Many websites use JavaScript to dynamically generate content. If your bot only crawls the initial source code and ignores JavaScript-rendered content, it might miss out or act differently than a human user. Make sure your Selenium setup can execute and interact with JavaScript just like a regular browser.

Consistent Browser and OS Configuration

Using the same browser setup for all your crawling tasks can make your bot easy to spot. Websites can detect patterns in browser fingerprints, which include details like your browser version, operating system, and even screen resolution. To avoid detection, consider rotating between different browser configurations.
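One way to rotate configurations is to keep a small list of profiles and pick one per session. The profiles below are placeholders, not real browser strings, and the helper names are made up for this example; `--user-agent` and `--window-size` are standard Chrome launch switches.

```python
import random

# Placeholder profiles; replace the user-agent values with strings from real, current browsers.
BROWSER_PROFILES = [
    {"user_agent": "ExampleBrowser/1.0 (placeholder A)", "window_size": (1920, 1080)},
    {"user_agent": "ExampleBrowser/1.0 (placeholder B)", "window_size": (1366, 768)},
    {"user_agent": "ExampleBrowser/1.0 (placeholder C)", "window_size": (1536, 864)},
]


def pick_profile(rng=random):
    """Choose one profile at random for the next browser session."""
    return rng.choice(BROWSER_PROFILES)


def profile_arguments(profile):
    """Turn a profile into Chrome launch arguments."""
    width, height = profile["window_size"]
    return [
        f"--user-agent={profile['user_agent']}",
        f"--window-size={width},{height}",
    ]
```

Pass each returned argument to `options.add_argument()` before creating the driver. Keep every profile internally consistent, since a user-agent that contradicts other navigator values is itself a detectable inconsistency.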

Blocked IP Addresses

Lastly, if you use the same IP address for multiple sessions or too many requests, websites might block it. This is a common method sites use to limit scraping activities. Using proxy IPs can help you avoid this issue by rotating your apparent location and identity.