What is Beautiful Soup?

Beautiful Soup is a Python library for parsing HTML and XML documents, which makes it a common choice for web scraping. It does not download pages itself; you fetch the markup with an HTTP client and hand it to Beautiful Soup. The library turns the document into a 'parse tree', a nested representation of the document's structure that you can search and navigate to find the data you need.
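As a rough illustration of what working with the parse tree looks like, the sketch below parses a small inline document and moves between related nodes. The HTML snippet, the `intro` class, and the `main` id are invented for the example.

```python
from bs4 import BeautifulSoup

# Made-up markup used only for this illustration.
html = """
<html>
  <head><title>Sample page</title></head>
  <body>
    <div id="main">
      <h1>Hello</h1>
      <p class="intro">First paragraph.</p>
    </div>
  </body>
</html>
"""

# "html.parser" ships with Python, so no extra install is needed.
soup = BeautifulSoup(html, "html.parser")

title_text = soup.title.string          # text inside <title>
intro = soup.find("p", class_="intro")  # first <p class="intro">
parent_id = intro.parent["id"]          # walk up to the enclosing <div>
# Direct child tags of that <div>, in document order.
child_tags = [child.name for child in intro.parent.find_all(recursive=False)]

print(title_text, parent_id, child_tags)
```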

Core Features

Efficient Data Extraction

Beautiful Soup simplifies the process of extracting data. You can find tags by name or by attribute, and navigate the document to collect every instance of a tag. For example, if you want to gather all the hyperlinks from a webpage, Beautiful Soup lets you find all the <a> tags and access their href attribute.
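The hyperlink example could look something like the following sketch. The HTML is an invented snippet; in practice it would come from a page you have already downloaded.

```python
from bs4 import BeautifulSoup

# Invented markup: two real links and one <a> tag without an href.
html = """
<ul>
  <li><a href="https://example.com/a">Page A</a></li>
  <li><a href="/b">Page B</a></li>
  <li><a name="anchor-only">No link here</a></li>
</ul>
"""

soup = BeautifulSoup(html, "html.parser")

# href=True keeps only <a> tags that actually carry an href attribute.
links = [a["href"] for a in soup.find_all("a", href=True)]

print(links)
```

Filtering with `href=True` avoids a `KeyError` on anchors that have no href.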

Handling Different Parsers

One of the strengths of Beautiful Soup is its ability to work with multiple parsers. Beautiful Soup does not parse markup itself; it sits on top of a parser that you choose for the task. Python's built-in html.parser needs no extra installation, lxml is generally the fastest option, and html5lib is slower but parses badly malformed HTML the way a web browser does.
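One way to pick a parser at runtime is sketched below. The helper name `parse_with_fallback` and the preference order are assumptions made for this example; the parser names themselves are the ones Beautiful Soup accepts.

```python
from bs4 import BeautifulSoup, FeatureNotFound

html = "<p>Unclosed paragraph<p>Another one"


def parse_with_fallback(markup, preferred=("lxml", "html5lib", "html.parser")):
    """Return (soup, parser_name) using the first parser that is installed."""
    for parser in preferred:
        try:
            return BeautifulSoup(markup, parser), parser
        except FeatureNotFound:
            # Raised when the requested parser library is not installed.
            continue
    raise RuntimeError("no usable parser found")


soup, used = parse_with_fallback(html)
print(used, len(soup.find_all("p")))
```

Because "html.parser" is part of the standard library, the fallback always has something to land on.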

Automatic Encoding Handling

Encoding can be a headache in web scraping. Beautiful Soup handles most encoding issues automatically: it converts incoming documents to Unicode, the standard representation of text in Python, and writes outgoing documents as UTF-8 by default, the encoding most widely used on the web. This saves time and reduces the risk of encoding-related errors.
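A small sketch of this behaviour, assuming a page served as ISO-8859-1 bytes with the encoding declared in a meta tag (the markup is made up for the example):

```python
from bs4 import BeautifulSoup

# Bytes encoded as ISO-8859-1, with the encoding declared in a <meta> tag.
raw = (
    '<html><head><meta charset="iso-8859-1"></head>'
    "<body><p>café</p></body></html>"
).encode("iso-8859-1")

soup = BeautifulSoup(raw, "html.parser")

text = soup.p.get_text()           # a regular Python str (Unicode)
detected = soup.original_encoding  # the encoding Beautiful Soup settled on
utf8_bytes = soup.encode("utf-8")  # serialize the document back to UTF-8 bytes

print(text, detected)
```

If detection guesses wrong, the `from_encoding` argument of the `BeautifulSoup` constructor lets you state the encoding explicitly.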

Gracefully Dealing with Bad HTML

Web pages on the internet often have incomplete or broken HTML. Beautiful Soup is designed to build a usable parse tree even from such markup, relying on the underlying parser to repair unclosed or misnested tags. Because parsers repair broken markup differently, the exact shape of the tree can vary with the parser you choose, but the data remains accessible.
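The sketch below feeds deliberately broken markup (unclosed `<li>` and `<p>` tags, invented for the example) to Beautiful Soup and still pulls out the text.

```python
from bs4 import BeautifulSoup

# None of the <li> or <p> tags below are closed.
broken = "<ul><li>One<li>Two</ul><p>Paragraph with no closing tag"

soup = BeautifulSoup(broken, "html.parser")

# Take the first piece of text inside each <li>; this works whether the
# parser treats the items as siblings or nests one inside the other.
items = [next(li.stripped_strings) for li in soup.find_all("li")]
paragraph = soup.find("p").get_text()

print(items, paragraph)
```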

What is Scrapy?

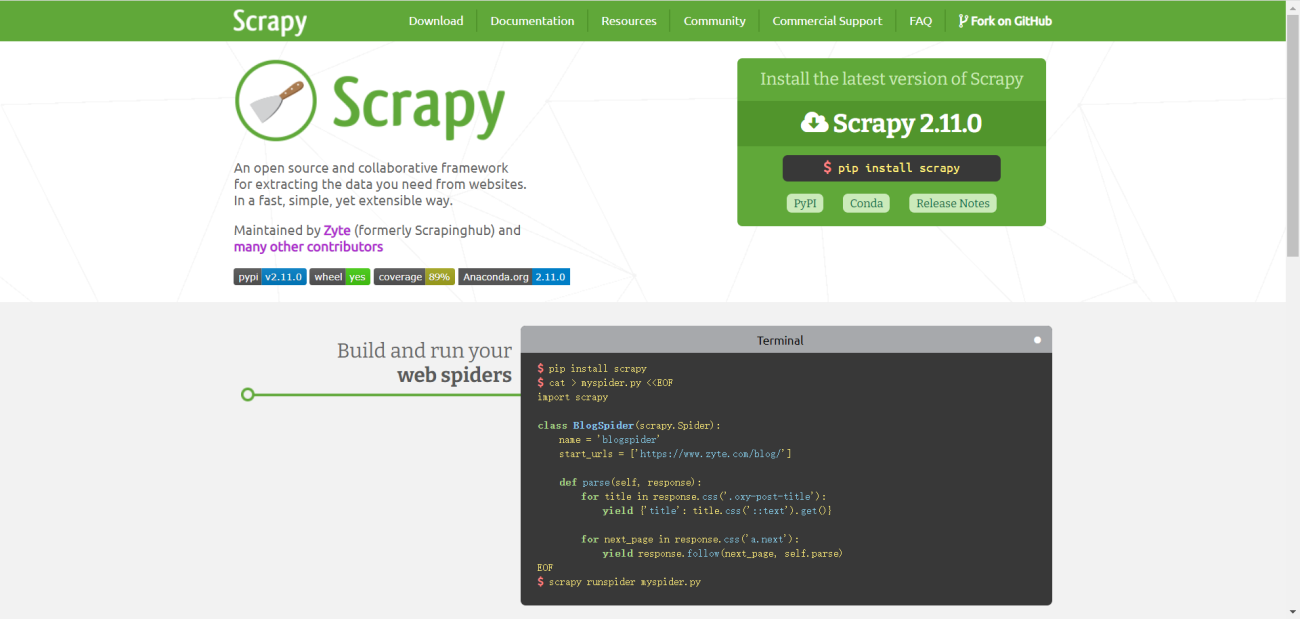

Scrapy is an open-source and collaborative framework for extracting the data you need from websites. It is written in Python and provides a fast and efficient way to scrape web pages. Unlike Beautiful Soup, which is only a parsing library, Scrapy is a complete framework that handles all aspects of web scraping, from sending requests to parsing HTML and exporting the results.

Core Features

Robust Web Crawling with Scrapy

Scrapy is designed to crawl websites at a large scale and process large amounts of data. It can navigate through pages and collect structured data from different URLs efficiently. This makes it ideal for projects that require collecting data from many web pages or entire websites.

Built-in Features for Speed and Ease

Scrapy comes with several built-in features that make web scraping a smoother process. It has tools for handling requests, following links, and exporting scraped data in various formats. Spiders in a project share the same settings, pipelines, and middleware, so much of the code you write for one website can be reused for others.
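Exporting is usually a matter of configuration rather than code. The fragment below sketches a `FEEDS` setting that writes the same items to JSON and CSV; the output paths are placeholders.

```python
# settings.py (fragment) -- output paths are placeholders for this example.
FEEDS = {
    "output/items.json": {
        "format": "json",
        "encoding": "utf8",
        "overwrite": True,  # replace the file on each run instead of appending
    },
    "output/items.csv": {
        "format": "csv",
    },
}
```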

Customizable and Extensible

One of the key advantages of Scrapy is its flexibility. It allows you to customize the scraping rules and logic to fit the specific requirements of the website you're targeting. Additionally, Scrapy has a rich collection of built-in extensions and middleware that you can enable or disable to add functionality like handling cookies or user agents.
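As a sketch of how this customization works, the example below defines a small downloader middleware and the settings that would enable it. The class name, the `myproject.middlewares` path, and the user agent string are hypothetical; `process_request`, `DOWNLOADER_MIDDLEWARES`, and `COOKIES_ENABLED` are standard Scrapy hooks and settings.

```python
class FixedUserAgentMiddleware:
    """Hypothetical downloader middleware that sets a User-Agent header."""

    def __init__(self, user_agent="my-scraper/0.1 (+https://example.com/contact)"):
        self.user_agent = user_agent

    def process_request(self, request, spider):
        request.headers["User-Agent"] = self.user_agent
        return None  # None tells Scrapy to keep processing this request


# settings.py (fragment): the dotted project path is a placeholder.
DOWNLOADER_MIDDLEWARES = {
    "myproject.middlewares.FixedUserAgentMiddleware": 543,
    # Mapping a built-in middleware to None disables it.
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": None,
}
COOKIES_ENABLED = False  # switches off the built-in cookies middleware
```

The integer is the middleware's position in the processing order; Scrapy's documentation lists the defaults for the built-in components.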

Dealing with Dynamic Content

Scrapy does not execute JavaScript on its own, but it can handle JavaScript-generated content through integrations. By integrating with tools like Splash, a JavaScript rendering service, Scrapy can receive pages rendered much as a browser would render them, which means it can scrape data from websites that rely heavily on JavaScript for their content.
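For reference, wiring Splash into a project is mostly configuration. The fragment below follows the setup described in the scrapy-splash plugin's README as best I recall it; it assumes the `scrapy-splash` package is installed and that a Splash instance is listening on `localhost:8050`, so treat the values as a starting point to check against the plugin's current documentation.

```python
# settings.py (fragment) -- assumes the scrapy-splash plugin and a local Splash server.
SPLASH_URL = "http://localhost:8050"

DOWNLOADER_MIDDLEWARES = {
    "scrapy_splash.SplashCookiesMiddleware": 723,
    "scrapy_splash.SplashMiddleware": 725,
    "scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 810,
}

SPIDER_MIDDLEWARES = {
    "scrapy_splash.SplashDeduplicateArgsMiddleware": 100,
}

# Makes duplicate filtering aware of Splash arguments.
DUPEFILTER_CLASS = "scrapy_splash.SplashAwareDupeFilter"
```

With this in place, a spider would issue the plugin's `SplashRequest` instead of a plain `scrapy.Request` for pages that need rendering.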

Pros and Cons: Beautiful Soup vs Scrapy

| Aspect | Beautiful Soup | Scrapy |
| --- | --- | --- |
| Language | Python | Python |
| Ease of Setup | Quick to install and easy to start using. Great for beginners. | Takes more time to set up and learn, especially for those new to programming. |
| Learning Curve | Simple for those with basic Python knowledge. | Steeper learning curve but offers comprehensive documentation to help users. |
| Flexibility | Works well for simple, small-scale scraping tasks. | Highly customizable and can be tailored to fit complex scraping needs. |
| Data Handling | Easy extraction of data from a webpage; saving the data is left to your own code. | Offers built-in options for exporting data in formats like CSV, JSON, and XML. |
| Speed | Slower than Scrapy for multi-page jobs, as it only parses and is not optimized for speed. | Faster because it's an asynchronous framework, meaning it can handle many requests at once without waiting for each one to finish. |
| Asynchronous Requests | Does not make requests at all; fetching, synchronous or asynchronous, is up to the HTTP client you pair it with. | Natively supports asynchronous requests, allowing faster data collection. |
| Crawling | Mainly used for parsing and extracting data from single pages. | Designed to crawl entire sites and follow links automatically. |
| Browser Support | Does not interact with browsers; only parses static HTML content. | Can interact with browsers through third-party tools for dynamic content. |
| Headless Execution | Can be used with headless browsers via third-party tools like Selenium. | No native headless browser; rendering is added through third-party tools such as Splash. |
| Browser Interaction | Limited interaction with webpages; mainly for parsing static content. | Can submit forms and manage cookies and sessions at the HTTP level. |
| JavaScript Content | Struggles with JavaScript-heavy websites unless combined with other tools like Selenium. | Can handle dynamic content by integrating with tools such as Splash to scrape JavaScript-generated content. |
| Proxy Support | Can be implemented manually or with additional packages. | Built-in support for using proxies to make requests from different IP addresses. |
| Middleware/Extensions Support | Requires third-party libraries for additional features. | Rich collection of built-in middleware and extensions for enhanced scraping capabilities. |
| Scalability | Not designed for very large projects or handling multiple requests concurrently. | Built for handling large-scale data extraction and can manage multiple requests at the same time. |
| Community Support | Has a large community and plenty of resources for troubleshooting. | Also has strong community support and many resources, including detailed documentation. |

Learning Path: Beautiful Soup and Scrapy

Learning Path for Beautiful Soup

When diving into web scraping with Beautiful Soup, your first step should be to get a solid understanding of Python. Python is the foundation upon which Beautiful Soup is built, and it's essential to be comfortable with it. Next, focus on the fundamentals of HTML and CSS, as these are the building blocks of web pages that you'll be interacting with. It's important to know how to identify the elements you want to scrape.

Once you've grasped these basics, you can start exploring the Beautiful Soup library. Begin by reading through the official documentation and working through the examples provided. This will give you a practical understanding of how to use Beautiful Soup to parse HTML and extract the data you need.

As you become more confident, it's crucial to apply what you've learned to real-world projects. Start small, perhaps by scraping data from a blog or a weather website. As you grow more skilled, you can take on more complex projects that require you to navigate multiple pages or handle forms and logins.
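A first multi-page project could be structured like the sketch below. The `h2.post-title` and `a.next` selectors and the function names are assumptions about a hypothetical blog; you would adjust them to the markup of the site you are practicing on, and only the standard library is used for fetching.

```python
from urllib.parse import urljoin
from urllib.request import urlopen

from bs4 import BeautifulSoup


def parse_page(html, base_url):
    """Return (post_titles, next_page_url or None) for one listing page."""
    soup = BeautifulSoup(html, "html.parser")
    titles = [h2.get_text(strip=True) for h2 in soup.select("h2.post-title")]

    next_link = soup.select_one("a.next")
    next_url = None
    if next_link is not None and next_link.has_attr("href"):
        # Resolve relative links such as "/page/2" against the current URL.
        next_url = urljoin(base_url, next_link["href"])
    return titles, next_url


def crawl(start_url, max_pages=5):
    """Follow 'next' links from start_url, visiting at most max_pages pages."""
    url, all_titles = start_url, []
    for _ in range(max_pages):
        if url is None:
            break
        with urlopen(url) as resp:
            html = resp.read()
        titles, url = parse_page(html, url)
        all_titles.extend(titles)
    return all_titles
```

Keeping the parsing in its own function means it can be tried on saved HTML files before any network requests are made.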

Resources for Learning Beautiful Soup

Codecademy Python Course: https://www.codecademy.com/learn/learn-python-3

Python.org's Beginner's Guide: https://docs.python.org/3/tutorial/index.html

W3Schools HTML Tutorial: https://www.w3schools.com/html/

W3Schools CSS Tutorial: https://www.w3schools.com/css/

Official Beautiful Soup Documentation: https://www.crummy.com/software/BeautifulSoup/bs4/doc/

Stack Overflow Beautiful Soup Questions: https://stackoverflow.com/questions/tagged/beautifulsoup

Real Python Web Scraping Tutorials: https://realpython.com/tutorials/web-scraping/

Learning Path for Scrapy

For Scrapy, which is a more comprehensive framework for web scraping, you should start by deepening your Python knowledge. Scrapy is powerful but also complex, and a good command of Python will help you make the most of its capabilities. Understanding web protocols like HTTP is also key, as Scrapy interacts with websites at a deeper level than Beautiful Soup.

Once you're ready, the Scrapy official tutorial is the best place to begin. It walks you through creating a simple spider to scrape a website and teaches you the basics of selecting and extracting data. From there, delve into the Scrapy documentation to learn about the various components of Scrapy, such as items, middlewares, and the item pipeline.

Building your own spiders and incrementally adding complexity is an excellent way to learn. Try to scrape websites that require handling cookies, sessions, and even JavaScript. Remember, Scrapy is well-suited for large projects, so don't shy away from ambitious tasks.

Resources for Learning Scrapy

Python for Beginners: https://www.pythonforbeginners.com/

Automate the Boring Stuff with Python: https://automatetheboringstuff.com/

MDN Web Docs - HTTP: https://developer.mozilla.org/en-US/docs/Web/HTTP

Scrapy Official Tutorial: https://docs.scrapy.org/en/latest/intro/tutorial.html

Scrapy Documentation: https://docs.scrapy.org/en/latest/

DigitalOcean Scrapy Tutorials: https://www.digitalocean.com/community/tags/scrapy

Scrapy Users Mailing List: https://groups.google.com/forum/#!forum/scrapy-users

GitHub Resources for Web Scraping

The GitHub repositories listed below provide information and examples for both Beautiful Soup and Scrapy. They offer guidance, code snippets, and even fully functional scraping solutions that you can study and learn from.

By following these learning paths and utilizing the resources and GitHub repositories provided, you'll be well on your way to mastering web scraping with Beautiful Soup and Scrapy. Remember to start with the basics, practice regularly, and progressively tackle more complex projects to enhance your skills.

Beautiful Soup Repository: https://github.com/wention/BeautifulSoup4

An unofficial GitHub mirror of the Beautiful Soup 4 source code, which is useful for reading how the library works. The project's official development is hosted on Launchpad.

Scrapy Repository: https://github.com/scrapy/scrapy

The official Scrapy framework repository where you can find the source code, issues, and contributions.

Awesome Web Scraping List: https://github.com/lorien/awesome-web-scraping

A curated list of awesome web scraping tools, libraries, and software for different programming languages.

Scrapy Book: https://github.com/scalingexcellence/scrapybook

Repository for the book "Learning Scrapy" with code examples.

Scrapy Cluster: https://github.com/istresearch/scrapy-cluster

This repository provides a Scrapy-based framework that uses Redis and Kafka to distribute large-scale web scraping across multiple machines.

Applied ML: https://github.com/eugeneyan/applied-ml

A curated list of papers and articles on applying data science and machine learning in production. It is a general reference for what to do with collected data rather than a web scraping tutorial.

Awesome Scrapy: https://github.com/croqaz/awesome-scrapy

A curated list of Scrapy packages, including middlewares and extensions, along with articles and other resources that can enhance the functionality of your spiders.

Conclusion

When deciding whether to use Beautiful Soup or Scrapy for your web scraping needs, think about the specific tasks you need to accomplish. Here's how to choose the right tool based on different scenarios:

Use Beautiful Soup if:

You're working on a simple project that involves extracting data from a single webpage.

You need to quickly prototype something without setting up a complex project.

The website you're scraping is static and doesn't require interacting with JavaScript.

You're looking to extract data from a local HTML file or a small set of HTML files.

Use Scrapy if:

Your project requires crawling multiple pages or entire websites.

You need to handle complex data extraction, follow links, and manage requests efficiently.

The website you're scraping relies on JavaScript-rendered content, which Scrapy can handle through integrations such as Splash, or you need to manage cookies and sessions.

You're considering scaling up your scraping project or integrating it into a larger pipeline.

By understanding these scenarios, you can better decide which tool is suited for your specific web scraping task. Beautiful Soup is excellent for straightforward, smaller-scale scraping, while Scrapy excels in more complex, large-scale scraping operations. Choose the tool that aligns with your project requirements, and you'll be on your way to successfully scraping the data you need.

For those of you using tools to automate your web scraping, it's smart to check how websites might react to your browser. With BrowserScan's "Bot Detection", you can find out if you seem like a bot. This can help you make changes so that your scraping doesn't get stopped and can keep going smoothly.
